There are many classification metrics we can use to evaluate a model, such as precision, recall, and accuracy (one of the most widely used), among many others.

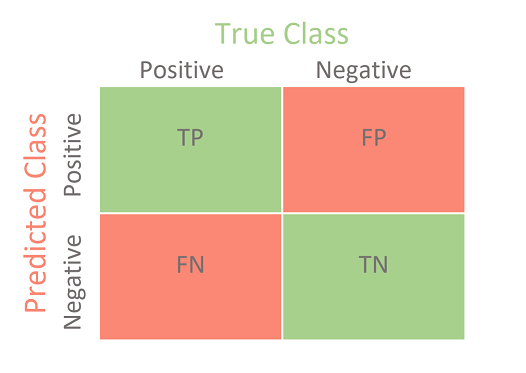

Let’s consider the following confusion matrix:

We can use confusion matrix to calculate different metrics.
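To make the four cells concrete, here is a minimal pure-Python sketch that counts TP, FP, FN and TN for a binary problem. It assumes labels are encoded as 1 (YES, the positive class) and 0 (NO). The function name `confusion_counts` and the sample labels are hypothetical, chosen only for illustration.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return (TP, FP, FN, TN) for a binary classification problem."""
    tp = fp = fn = tn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive:
            if actual == positive:
                tp += 1  # predicted YES, actually YES
            else:
                fp += 1  # predicted YES, actually NO
        else:
            if actual == positive:
                fn += 1  # predicted NO, actually YES
            else:
                tn += 1  # predicted NO, actually NO
    return tp, fp, fn, tn


# Hypothetical labels: 1 = YES, 0 = NO
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
print(confusion_counts(y_true, y_pred))  # (3, 1, 1, 3)
```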

Accuracy: The number of correct predictions our model made divided by the total number of predictions.

Accuracy = (TP+TN) / (TP+FP+FN+TN)
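Plugging counts into the formula is straightforward. The sketch below uses hypothetical counts (TP = 3, TN = 3, FP = 1, FN = 1); `accuracy` is an illustrative helper, not a library function.

```python
def accuracy(tp, tn, fp, fn):
    """Accuracy = (TP + TN) / (TP + FP + FN + TN)."""
    return (tp + tn) / (tp + fp + fn + tn)


# Hypothetical counts: 6 correct predictions out of 8
print(accuracy(tp=3, tn=3, fp=1, fn=1))  # 0.75
```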

So can we say that the higher a model’s accuracy, the better it performs?

It depends on what we are predicting. If we are predicting whether an asteroid will hit the Earth, a model that always predicts “NO” could reach 99% accuracy, because hits are extremely rare. Yet such a model is useless, so we need other metrics to evaluate it.
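The asteroid case can be simulated with made-up data. The sketch below assumes 1,000 observations of which only 10 are actual hits, and a "model" that ignores its input and always predicts NO. These numbers are hypothetical, not real asteroid statistics.

```python
# Hypothetical data: 1,000 observations, only 10 actual hits (1 = YES, 0 = NO).
y_true = [1] * 10 + [0] * 990

# A "model" that always predicts NO, regardless of the input.
y_pred = [0] * len(y_true)

correct = sum(1 for actual, predicted in zip(y_true, y_pred) if actual == predicted)
accuracy = correct / len(y_true)
print(accuracy)  # 0.99, even though not a single hit was detected
```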

Precision: The ratio of correctly predicted positive observations to the total predicted positive observations.

Precision = (TP) / (TP+FP)

Precision is not a suitable metric for the asteroid model: it never predicts the positive class (YES), so TP + FP = 0 and precision is undefined (0/0).
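A small helper makes the undefined case explicit. This sketch returns `None` when there are no positive predictions; that is a choice made here for illustration only. Libraries handle the case differently (scikit-learn’s `precision_score`, for example, has a `zero_division` parameter for this situation). The counts below are hypothetical.

```python
def precision(tp, fp):
    """Precision = TP / (TP + FP); undefined when the model never predicts YES."""
    if tp + fp == 0:
        return None  # 0/0: the model made no positive predictions at all
    return tp / (tp + fp)


# Always-NO asteroid model: no positive predictions, so TP = FP = 0.
print(precision(tp=0, fp=0))  # None
# A model with some positive predictions (hypothetical counts).
print(precision(tp=3, fp=1))  # 0.75
```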

Recall: The proportion of actual positives that the model correctly classified.

Recall = (TP) / (TP+FN)

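On its own, recall is not enough either. The always-NO model has TP = 0, so its recall is 0, which does expose the problem; but a model that always predicts “YES” would get a perfect recall of 1 while being just as useless.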
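Using hypothetical asteroid data (10 actual hits among 1,000 cases), the sketch below compares the recall of an always-NO model with that of an always-YES model. The helper `recall` is illustrative, and returning `None` when there are no actual positives is a choice made here.

```python
def recall(tp, fn):
    """Recall = TP / (TP + FN)."""
    if tp + fn == 0:
        return None  # no actual positives in the data
    return tp / (tp + fn)


# Always-NO model: every one of the 10 actual hits is missed.
print(recall(tp=0, fn=10))  # 0.0
# Always-YES model: every actual hit is "caught", along with 990 false alarms.
print(recall(tp=10, fn=0))  # 1.0
```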

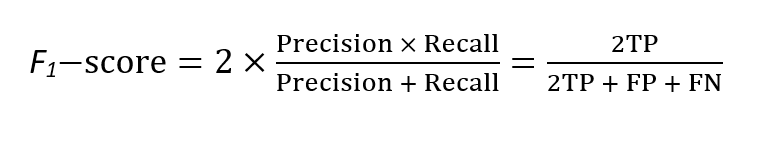

F1-score: A better metric for the previous model, since it combines precision and recall into a single value (their harmonic mean). It ranges from 0 to 1:

F1 = 2 × (Precision × Recall) / (Precision + Recall)
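As a sketch, F1 can be computed directly from precision and recall. The inputs are hypothetical: the second call assumes an always-YES asteroid model on data with 10 hits among 1,000 cases (precision = 10/1000 = 0.01, recall = 1), showing that a perfect recall still yields a low F1. Returning 0.0 when both inputs are zero is a convention chosen here.

```python
def f1(precision, recall):
    """F1 = 2 * P * R / (P + R), the harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0  # convention: both metrics are zero, so F1 is zero
    return 2 * precision * recall / (precision + recall)


print(f1(0.75, 0.75))           # 0.75
print(round(f1(0.01, 1.0), 4))  # 0.0198: high recall cannot hide the terrible precision
```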

We can use a classification report (for example, scikit-learn’s `classification_report`) to generate all of the previous metrics for a model.
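If scikit-learn is installed, its `classification_report` function prints precision, recall, F1-score and support for each class, plus accuracy and averaged scores. The labels below are made up for illustration; `target_names` only sets the display names for classes 0 and 1, and `output_dict=True` returns the same numbers as a dictionary.

```python
from sklearn.metrics import classification_report

# Hypothetical labels: 1 = YES, 0 = NO
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]

# Human-readable table of per-class metrics
print(classification_report(y_true, y_pred, target_names=["NO", "YES"]))

# The same metrics as a nested dictionary, convenient for programmatic checks
report = classification_report(
    y_true, y_pred, target_names=["NO", "YES"], output_dict=True
)
print(report["YES"]["f1-score"])
```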

Check this article for more metrics and an in-depth explanation.